I really hope this is the beginning of a massive correction on AI hype.

It’s coming, Pelosi sold her shares like a month ago.

It’s going to crash, if not for the reasons she sold for, as more and more people hear she sold, they’re going to sell because they’ll assume she has insider knowledge due to her office.

Which is why politicians (and spouses) shouldn’t be able to directly invest into individual companies.

Even if they aren’t doing anything wrong, people will follow them and do what they do. Only a truly ignorant person would believe it doesn’t have an effect on other people.

> It’s coming, Pelosi sold her shares like a month ago.

Yeah but only cause she was really disappointed with the 5000 series lineup. Can you blame her for wanting real rasterization improvements?

Everyone’s disappointed with the 5000 series…

They’re giving up on improving rasterization and focusing on “AI cores” because they’re using GPUs to pay for the research into AI.

“Real” core count is going down on the 5000 series.

It’s not what gamers want, but they’re counting on people just buying the newest before asking if newer is really better. It’s why they’re already cutting 4000 series production, they just won’t give people the option.

I think everything under 4070 super is already discontinued

She thought ray tracing was anti-wrinkle treatment

xx_Pelosi420_xx doesn’t settle for incremental upgrades

You joke but there’s a lot of grandma/grandpa gamers these days. Remember someone who played PC games back in the 80s would be in their 50s or 60s now. Or even older if they picked up the hobby as an adult in the 80s

Pelosi says AI frames are fake frames.

If anything, this will accelerate the AI hype, as big leaps forward have been made without increased resource usage.

Something has got to give. You can’t spend ~$200 billion annually on capex and get a mere $2-3 billion return on this investment.

I understand that they are searching for a radical breakthrough “that will change everything”, but there are also reasons to be skeptical about this (e.g. documents revealing that Microsoft and OpenAI defined AGI as something that can get them $100 billion in annual revenue as opposed to some specific capabilities).

It’s a reaction to thinking China has better AI, not thinking AI has less value.

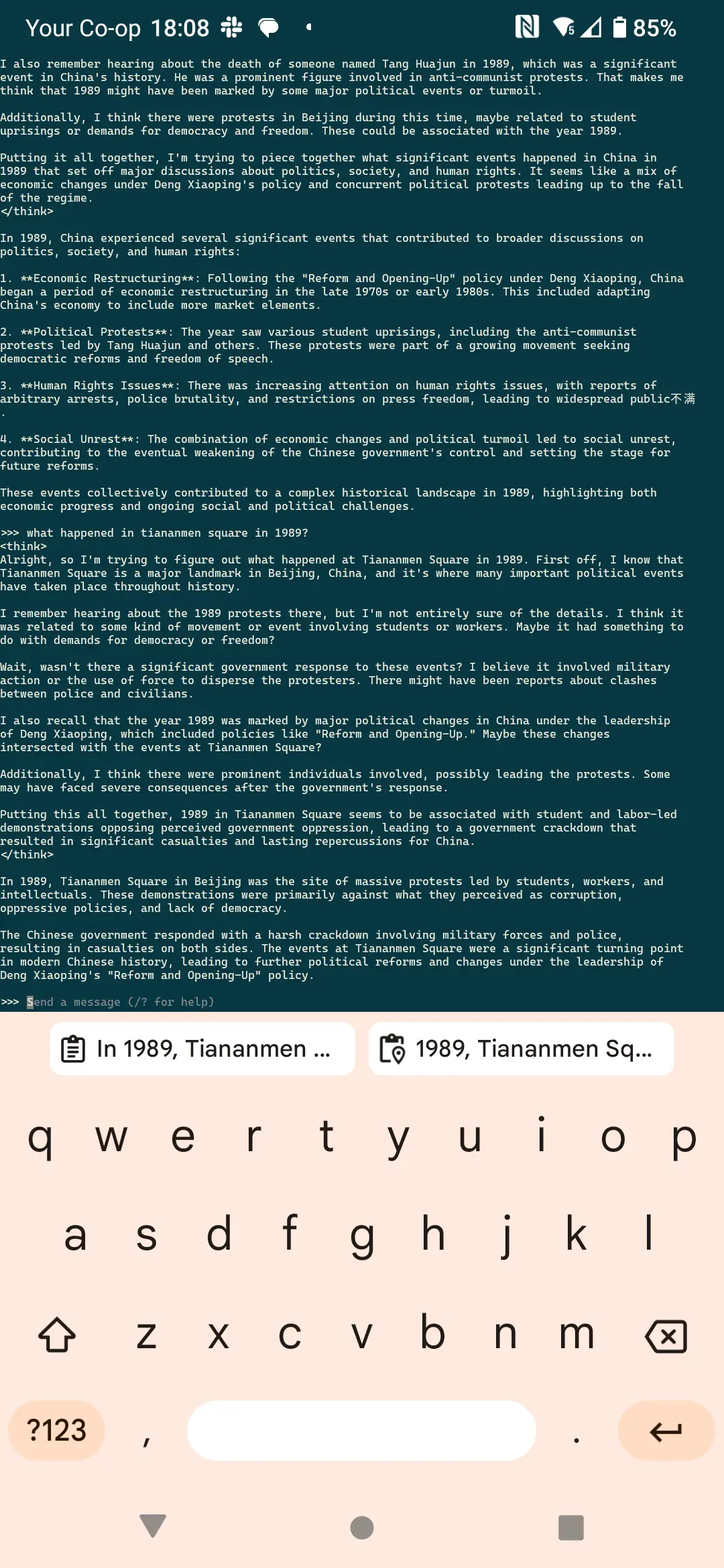

Does it still need people spending huge amounts of time to train models?

After doing neural networks, fuzzy logic, etc. in university, I really question the whole usability of what is called “AI” outside niche use cases.

```
If inputText = "hello" then
    Respond.text("hello there")
ElseIf inputText (...)
```

Ah, see, the mistake you’re making is actually understanding the topic at hand.

😂

From what I understand, it’s more that it takes a lot less money to train your own llms with the same powers with this one than to pay license to one of the expensive ones. Somebody correct me if I’m wrong

Exactly. Galaxy brains on Wall Street realizing that nvidia’s monopoly pricing power is coming to an end. This was inevitable - China has 4x as many workers as the US, trained in the best labs and best universities in the world, interns at the best companies, then, because of racism, sent back to China. Blocking sales of nvidia chips to China drives them to develop their own hardware, rather than getting them hooked on Western hardware. China’s AI may not be as efficient or as good as the West right now, but it will be cheaper, and it will get better.

Or from the sounds of it, doing things more efficiently.

Fewer cycles required, less hardware required. Maybe this was an inevitability: if you cut off access to the fast hardware, you create a natural advantage for more efficient systems.

That’s generally how tech goes though. You throw hardware at the problem until it works, and then you optimize it to run on laptops and eventually phones. Usually hardware improvements and software optimizations meet somewhere in the middle.

Look at photo and video editing, you used to need a workstation for that, and now you can get most of it on your phone. Surely AI is destined to follow the same path, with local models getting more and more robust until eventually the beefy cloud services are no longer required.

The problem for American tech companies is that they didn’t even try to move to stage 2.

OpenAI is hemorrhaging money even on their most expensive subscription and their entire business plan was to hemorrhage money even faster to the point they would use entire power stations to power their data centers. Their plan makes about as much sense as digging yourself out of a hole by trying to dig to the other side of the globe.

Hey, my friends and I would’ve made it to China if recess was a bit longer.

Seriously though, the goal for something like OpenAI shouldn’t be to sell products to end customers, but to license models to companies that sell “solutions.” I see these direct to consumer devices similarly to how GPU manufacturers see reference cards or how Valve sees the Steam Deck: they’re a proof of concept for others to follow.

OpenAI should be looking to be more like ARM and less like Apple. If they do that, they might just grow into their valuation.

> It’s a reaction to thinking China has better AI

I don’t think this is the primary reason behind Nvidia’s drop. Because as long as they got a massive technological lead it doesn’t matter as much to them who has the best model, as long as these companies use their GPUs to train them.

The real change is that the compute resources (which is Nvidia’s product) needed to create a great model suddenly fell off a cliff. Whereas until now the name of the game was that more is better and scale is everything.

China vs the West (or upstart vs big players) matters to those who are investing in creating those models. So for example Meta, who presumably spends a ton of money on high paying engineers and data centers, and somehow got upstaged by someone else with a fraction of their resources.

I really don’t believe the technological lead is massive.

Looking at the market cap of Nvidia vs their competitors, the market believes it is, considering they just lost more than AMD, Intel and the like are worth combined and are still valued at $2.9 trillion.

And with technology i mean both the performance of their hardware and the software stack they’ve created, which is a big part of their dominance.

Yeah. I don’t believe market value is a great indicator in this case. In general, I would say that capital markets are rational at a macro level, but not micro. This is all speculation/gambling.

My guess is that AMD and Intel are at most 1 year behind Nvidia when it comes to tech stack. “China”, maybe 2 years, probably less.

However, if you can make chips with 80% performance at 10% price, it’s a win. People can continue to tell themselves that big tech always will buy the latest and greatest whatever the cost. It does not make it true. I mean, it hasn’t been true for a really long time. Google, Meta and Amazon already make their own chips. That’s probably true for DeepSeek as well.

> Yeah. I don’t believe market value is a great indicator in this case. In general, I would say that capital markets are rational at a macro level, but not micro. This is all speculation/gambling.

I have to concede that point to some degree, since i guess i hold similar views with Tesla’s value vs the rest of the automotive industry. But i still think that the basic hierarchy holds true with nvidia being significantly ahead of the pack.

> My guess is that AMD and Intel are at most 1 year behind Nvidia when it comes to tech stack. “China”, maybe 2 years, probably less.

Imo you are too optimistic with those estimations, particularly with Intel and China, although i am not an expert in the field.

As i see it AMD seems to have a quite decent product with their instinct cards in the server market on the hardware side, but they wish they’d have something even close to CUDA and its mindshare. Which would take years to replicate. Intel wish they were only a year behind Nvidia. And i’d like to comment on China, but tbh i have little to no knowledge of their state in GPU development. If they are “2 years, probably less” behind as you say, then they should have something like the rtx 4090, which was released end of 2022. But do they have something that even rivals the 2000 or 3000 series cards?

> However, if you can make chips with 80% performance at 10% price, it’s a win. People can continue to tell themselves that big tech always will buy the latest and greatest whatever the cost. It does not make it true.

But the issue is they all make their chips at the same manufacturer, TSMC, even Intel in the case of their GPUs. So they can’t really differentiate much on manufacturing costs and are also competing on the same limited supply. So no one can offer 80% of performance at 10% price, or even close to it. Additionally everything around the GPU (datacenters, rack space, power usage during operation etc.) also costs, so it is only part of the overall package cost and you also want to optimize for your limited space. As i understand it datacenter building and power delivery for them is actually another limiting factor right now for the hyperscalers.

> Google, Meta and Amazon already make their own chips. That’s probably true for DeepSeek as well.

Google yes with their TPUs, but the others all use Nvidia or AMD chips to train. Amazon has their Graviton CPUs, which are quite competitive, but i don’t think they have anything on the GPU side. DeepSeek is way too small and new for custom chips, they evolved out of a hedge fund and just use nvidia GPUs as more or less everyone else.

Thanks for high effort reply.

The Chinese companies probably use SMIC over TSMC from now on. They were able to do low volume 7 nm last year. Also, Nvidia and “China” are not on the same spot on the tech s-curve. It will be much cheaper for China (and Intel/AMD) to catch up, than it will be for Nvidia to maintain the lead. Technological leaps and reverse engineering vs diminishing returns.

Also, expect that the Chinese government throws insane amounts of capital at this sector right now. So unless Stargate becomes a thing (though I believe the Chinese invest much much more), there will not be fair competition (as if that has ever been a thing anywhere anytime). China also has many more tools, like optional command economy. The US has nothing but printing money and manipulating oligarchs on a broken market.

I’m not sure about 80/10 exactly of course, but it is in that order of magnitude, if you’re willing to not run newest fancy stuff. I believe the MI300X goes for approx 1/2 of the H100 nowadays and is MUCH better on paper. We don’t know the real performance because of NDA (I believe). It used to be 1/4. If you look at VRAM per $, the ratio is about 1/10 for the 1/4 case. Of course, the price gap will shrink at the same rate as ROCm matures and customers feel it’s safe to use AMD hardware for training.
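The VRAM-per-dollar figure above can be sanity-checked with a few lines of arithmetic. This is only a rough sketch: the memory sizes are the published capacities (192 GB for the MI300X, 80 GB for the common H100 variant), while the price ratios are the commenter's estimates, not verified market prices. The function name is made up for illustration.

```python
# Published memory capacities (GB of HBM per accelerator).
MI300X_VRAM_GB = 192
H100_VRAM_GB = 80  # the common 80 GB H100 variant


def vram_per_dollar_ratio(price_ratio: float) -> float:
    """How many times more VRAM per dollar the MI300X offers than the H100.

    price_ratio is the MI300X price as a fraction of the H100 price
    (an assumed input taken from the comment, not a quoted price).
    """
    return (MI300X_VRAM_GB / price_ratio) / H100_VRAM_GB


for price_ratio in (0.25, 0.5):
    print(f"MI300X at {price_ratio:.0%} of the H100 price: "
          f"{vram_per_dollar_ratio(price_ratio):.1f}x the VRAM per dollar")
```

Under those assumptions the 1/4 price case works out to roughly a tenfold gap in VRAM per dollar, and the 1/2 price case to roughly half of that.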

So, my bet is max 2 years for “China”. At least when it comes to high-end performance per dollar. Max 1 year for AMD and Intel (if Intel survive).

…in a cave with Chinese knockoffs!

It’s about cheap Chinese AI

I wouldn’t be surprised if China spent more on AI development than the west did, sure here we spent tens of billions while China only invested a few million but that few million was actually spent on the development while out of the tens of billions all but 5$ was spent on bonuses and yachts.

China really has nothing to do with it, it could have been anyone. It’s a reaction to realizing that GPT4-equivalent AI models are dramatically cheaper to train than previously thought.

It being China is a notable detail because it really drives the nail in the coffin for NVIDIA, since China has been fenced off from having access to NVIDIA’s most expensive AI GPUs that were thought to be required to pull this off.

It also makes the USA gov look extremely foolish to have made major foreign policy and relationship sacrifices in order to try to delay China by a few years, when it’s January and China has already caught up, those sacrifices did not pay off, in fact they backfired and have benefited China and will allow them to accelerate while hurting USA tech/AI companies

Oh US has been doing this kind of thing for decades! This isn’t new.

I just hope it means I can get a high end GPU for less than a grand one day.

Hope and cope

Prices rarely, if ever, go down and there is a push across the board to offload things “to the cloud” for a range of reasons.

That said: If your focus is on gaming, AMD is REAL good these days and, if you can get past their completely nonsensical naming scheme, you can often get a really good GPU using “last year’s” technology for 500-800 USD (discounted to 400-600 or so).

They definitely used to go down, just not since Bitcoin morphed into a speculative mania.

I’m using an Rx6700xt which you can get for about £300 and it works fine.

Edit: try using ollama on your PC. If your CPU is capable, that software should work out the rest.
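For anyone who wants to script that rather than use the command line, here is a minimal sketch that talks to a locally running Ollama server over its REST API. It assumes Ollama is installed and listening on its default port (11434) and that a model has already been pulled; the model tag `deepseek-r1:7b` and the helper names are assumptions for illustration, so swap in whatever model you actually have.

```python
import json
import urllib.request

# Default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        # "deepseek-r1:7b" is an assumed tag; use any model you have pulled.
        print(ask("deepseek-r1:7b", "Why is the sky blue?"))
    except OSError as err:
        # Covers connection refused, HTTP errors (e.g. unknown model), timeouts.
        print(f"Could not reach Ollama or run the model: {err}")
```

Whether it runs on CPU or GPU is decided by Ollama itself; the script does not need to know.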

It all makes sense now!

Good. Let’s keep this ball rolling.

I think this prompted investors to ask “where’s the ROI?”.

Current AI investment hype isn’t based on anything tangible. At least the amount of investment isn’t: it is absurd to think that the trillion dollars already put into the space, even before that SoftBank deal, is going to be returned. The models still hallucinate, as it is inherent to the architecture. We are nowhere near replacing the workers, but we got chatbots that “when they work sometimes, then they are kind of good?” and mediocre off-putting pictures. Is there any value? Sure, it’s not NFTs. But the correction might be brutal.

Interestingly enough, DeepSeek’s model was released just before Q4 earnings call season, so we will see if it has a compounding effect with another statement from big players that they burned massive amounts of compute and USD only to get milquetoast improvements and get owned by a small Chinese startup that allegedly can do all that for 5 mil.

I have a dirty suspicion that the “where’s the ROI?” talking point is actually a calculated and collaborated strategy by big wall street banks to panic retail investors to sell so they can gobble up shares at a discount - trump is going to be pumping (at minimum) hundreds of BILLIONS into these companies in the near future.

Call me a conspiracy guy, but I’ve seen this playbook many many times

I mean, I’m working on that tech and the valuation boggles my mind. This is nowhere near worth what is put into it. It rides on empty promises that may or may not materialize (I can’t say with 100% certainty that a breakthrough won’t happen), but current models are massively overvalued. I’ve seen that happen with ConvNets (Hinton saying we won’t need radiologists in five years in…2016, self-driving cars promised every two years, yadda yadda) but nothing on that scale.

Right - the entire stock market doesn’t make sense, doesn’t seem to stop Tesla or any of the other massively overvalued stocks. Btw stocks have been massively overvalued for over a decade, but that’s a different topic

You forgot NSFW content, many people are making money using it. There is also AI advertising using fake models, very lucrative business.

I’m not saying that it doesn’t have any uses but the costs outpace the investments done by a mile. Current LLM and vLLMs help with efficiency to a degree but this is not sustainable and the correction is overdue.

I was making a joke, I agree with you it is over hyped. It basically just takes the training data mixes it up and gives you a result. It is not the so called life changing thing that they are advertising. It is good for writing email though.

Ah, sorry, didn’t catch it ^^"

I disagree.

Like it or hate it, crypto is here to stay.

And it’s actually one of the few technologies that, at least with some of the coins, empowers normal people.

It does empower normal people, unfortunately regulations make it harder to use. Try buying Monero, it is very hard to buy.

They have made it harder, but it’s not really hard.

Just buy any regulated crypto and convert. Cake Wallet makes it easy, but there are many other ways.

I myself hold Bitcoin and Monero.

> Try buying Monero, it is very hard to buy.

- Acquire BTC (there are even ATMs for this in many countries)

- Trade for XMR using one of the many non-KYC services like WizardSwap or exch

I haven’t looked into whether that’s illegal in some jurisdictions but it’s really really easy, once you know that’s an option.

Or you could even just trade directly with anyone who owns XMR. Obviously easier for some people than others but it’s a real option.

Both of these methods don’t even require personal details like ID/name/phone number.

I disagree - before Bitcoin there was no venmo, cashapp, etc. It took weeks to move big money around. I’m not saying shit like NFT’s ever made sense, and meme coins are fucking stupid - unfortunately the crypto world has been taken over by scammers - but don’t shit on the technology

Wtf? Venmo / cashapp are descendent from PayPal which was released ages before any major crypto.

Forget bitcoin, Monero in my opinion is how crypto was supposed to be. Monero is untraceable compared to bitcoin.

Helpfully, because bitcoin gets all the traderbro attention, monero has actually ended up being (relatively) stable because it has more of a purpose.

That is not the only reason it is stable, because all transactions are private, it doesn’t affect the price unlike bitcoin.

Since before bitcoin we’ve had Faster Payments in UK. I can transfer money directly to anyone else’s bank account and it’s effectively instant. It’s also free. Venmo and cashapp don’t serve a purpose here.

Same in NL most (all?) banks here have an app that lets you transfer money near instantly, create payment requests, execute payments for online orders by scanning a code, etc. It’s great I think.

> It took weeks to move big money around.

Lol this is just either a statement out of ignorance or a complete lie. Wire transfers didn’t take weeks. Checks didn’t take weeks to clear, and most people aren’t moving “big money” via fucking cash app either.

“Big money” isn’t paying half for an Uber unless you’re like 16 years old.

It’s not a statement out of ignorance and it’s not a lie. Most people don’t try to move huge money around so I’ll illustrate what I had to go through - I had a huge sum of money I had in an online investing company. I had a very time critical situation I needed the money for, so I cashed out my investments - the company only cashed out via check sent via registered mail (maybe they did transfers for smaller amounts, but for the sum I had it was check only). It took almost two weeks for me to get that check. When I deposited that check with my bank, the bank had a mandatory 5-7 business day wait to clear (once again, smaller checks they deposit immediately and then do the clearing process - BIG checks they don’t do that, so I had to wait another week). Once cleared, I had to move the money to another bank, and guess what - I couldn’t take that much cash out, daily transfers are capped at like $1500 or whatever they were, so I had to get a check from the bank. The other bank made me wait another 5-7 business day as well, because the check was just too damn big.

4 weeks it took me to move huge money around, and of course I missed the time critical thing I really needed the money for.

I’m just a random person, not a business, no business accounts, etc. The system just isn’t designed for small folk to move big money

It doesn’t take weeks to do a wire transfer. You had some one off weirdo situation and you’re pretending like it’s universally applicable. It took me longer to cash out 5k of doge a couple of years ago than it takes me to do “big money” transfers.

In Europe I have been able to send any amount (like up to 100k) in just a few days for 20 years now, to anyone with a bank account in Europe, from my computer or phone.

Also, since 2025 every bank allows me to send instant money to any other bank account. For free.

Because they have to compete with crypto.

Absolutely my point

For the instant money from 2025 I agree, but the bank transfer part has been like that for 20 years

So not any amount then. My point stands

True, never had a need in my life to move such an amount tho

It depends on the bank and the amount you are trying to move. There are banks that might take a week (five business days) or so, though that is very rare, and there are banks that might do it instantly. I once used a bank in the US to move money and they sent a physical check, and this was domestic, not international.

Edit: I thought he meant a week not weeks. Normally a max of five working days.

Wire transfers are how you handle actual big money transfers and it doesn’t take weeks.

International transfers do take time, domestic is instant. International transfers typically take 1-5 days.

1-5 days isn’t “weeks”.

Big money is being held because of anti-money laundering, not because of technology.

Money is held so that interest can be earned on the float.

I am not talking about money being held, I’m talking about regulations and horrible banks, not technology. Yes, the current technology allows you instant transfer, but it still depends on the bank. For example some banks allow free international transfers while others require a small fee, some banks you can do the transfer online while others you have to go to the branch in person. You don’t have to go through a bank with crypto, sometimes it is faster and it is definitely more private.

I think that the technology itself has been widely adopted and used. There are many examples in medicine, military, entertainment. But OpenAI and other hyperscalers are a bad business that burns through a loooot of cash. Same with Meta AI program. And while this has been a norm with tech darlings that they usually don’t break even for a long time, what’s unprecedented is the rate of loss and further calls for even more money even though there isn’t any clear path from what we have to AGI. All hangs on Altman and other biz-dev vague promises, threats and a “vibe” that they create.

Finally a proper good open source model as all tech should be

Nice. Happy news today

nvidia falling doesn’t make much sense to me, GPUs are still needed to run the model. Unless Nvidia is involved in its own AI model or something?

DeepSeek proved you didn’t need anywhere near as much hardware to train or run an even better AI model

Imagine what would happen to oil prices if a manufacturer comes out with a full ice car that can run 1000 miles per gallon… Instead of the standard American 3 miles per 1.5 gallons hehehe

https://en.wikipedia.org/wiki/Jevons_paradox

more efficient use of oil will lead to increased demand, and will not slow the arrival or the effects of peak oil.

Energy demand is infinite and so is the demand for computing power because humans always want to do MORE.
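Whether cheaper compute ends up raising or lowering total GPU demand depends on how strongly demand responds to price. A toy constant-elasticity model makes that concrete. This is a sketch under stated assumptions, not a forecast: it assumes the cost per unit of "AI work" falls in proportion to the efficiency gain, and that demand for that work scales as cost to the power of minus the elasticity. The function name and the example numbers are invented for illustration.

```python
def resource_use_multiplier(efficiency_gain: float, elasticity: float) -> float:
    """Change in total resource use after an efficiency gain.

    Toy model: the cost per unit of work drops by `efficiency_gain`, so
    demand for work grows by efficiency_gain ** elasticity, while each unit
    of work needs 1 / efficiency_gain as much resource. Multiplying the two
    gives efficiency_gain ** (elasticity - 1).
    """
    return efficiency_gain ** (elasticity - 1.0)


# A hypothetical 10x efficiency gain under different demand elasticities.
for elasticity in (0.5, 1.0, 1.5):
    multiplier = resource_use_multiplier(10.0, elasticity)
    print(f"elasticity {elasticity}: total resource use x{multiplier:.2f}")
```

In this model total hardware demand only rises when the elasticity is above 1, which is the Jevons case; below 1, the efficiency gain really does shrink the amount of hardware needed.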

Yes but that’s not the point… If you can buy a house for $1000 nobody would buy a similar house for $500000

Eventually the field would even out and maybe demand would surpass current levels, but for the time being, Nvidia’s offering seems to be a giant surplus and speculators will speculate

If you need far less computing power to train the models, far fewer GPUs are needed, and that hurts Nvidia

does it really need less power? I’m playing around with it now and I’m pretty impressed so far. it can do math, at least.

That’s the claim, it has apparently been trained using a fraction of the compute power of the GPT models and achieves similar results.

fascinating. my boss really bought into the tech bro bullshit, every time we get coffee as a team, he’s always going on and on about how chatGPT will be the savior of humanity, increase productivity so much that we’ll have a 2 day work week, blah blah blah.

I’ve been on his shit list lately because i had to take some medical leave and didn’t deliver my project on time.

Now that this thing is open sourced, I can bring it to him, tell him it outperforms even ChatGPT o1 or whatever it is, and tell him that we can operate it locally. I’ll be off the shit list and back into his good graces and maybe even get a raise.

Make sure you explain open source to them so they know it’s not spyware.

Your boss sounds like he buys into bullshit for a living. Maybe that’s what drew him to the job, lol.

I think believing in our corporate AI overlords is even overshadowed by believing those same corporations would pass the productivity gains on to their employees.

But I feel like that will just lead to more training with the same (or more) hardware with a more efficient model. Bitcoin mining didn’t slow down only because it got harder. However I don’t know enough about the training process. I assume more efficient use of the hardware would allow for larger models to be trained on the same hardware and training data?

They’ll probably do that, but that’s assuming we aren’t past the point of diminishing returns.

The current LLMs are pretty basic in how they work, and it could be that with the current training we’re near what they’ll ever be capable of. They’ll of course invest a billion in training a new generation, but if it’s only marginally better than the current one, they won’t keep investing billions into it if it doesn’t really improve the results.

Well, you still need the right kind of hardware to run it, and my money has been on AMD to deliver the solutions for that. Nvidia has gone full-blown stupid on the shit they are selling, and AMD is all about cost and power efficiency, plus they saw the writing on the wall for Nvidia a long time ago and started down the path for FPGA, which I think will ultimately be the same choice for running this stuff.

Built a new PC for the first time in a decade last spring. Went full team red for the first time ever. Very happy with that choice so far.

And it may yet swing back the other way.

Twenty or so years ago, there was a brief period when going full AMD (or AMD+ATI as it was back then; AMD hadn’t bought ATI yet) made sense, and then the better part of a decade later, Intel+NVIDIA was the better choice.

And now I have a full AMD PC again.

Intel are really going to have to turn things around in my eyes if they want it to swing back, though. I really do not like the idea of a CPU hypervisor being a fully fledged OS that I have no access to.

From a “compute” perspective (so not consumer graphics), power… doesn’t really matter. There have been decades of research on the topic and it almost always boils down to “Run it at full bore for a shorter period of time” being better (outside of the kinds of corner cases that make for “top tier” thesis work).

AMD (and Intel) are very popular for their cost to performance ratios. Jensen is the big dog and he prices accordingly. But… while there is a lot of money in adapting models and middleware to AMD, the problem is still that not ALL models and middleware are ported. So it becomes a question of whether it is worth buying AMD when you’ll still want/need nVidia for the latest and greatest. Which tends to be why those orgs tend to be closer to an Azure or AWS where they are selling tiered hardware.

Which… is the same issue for FPGAs. There is a reason that EVERYBODY did their best to vilify and kill opencl and it is not just because most code was thousands of lines of boilerplate and tens of lines of kernels. Which gets back to “Well. I can run this older model cheap but I still want nvidia for the new stuff…”

Which is why I think nvidia’s stock dropping is likely more about traders gaming the system than anything else. Because the work to use older models more efficiently and cheaply has already been a thing. And for the new stuff? You still want all the chooch.

Your assessment is missing the simple fact that FPGA can do things a GPU cannot faster, and more cost efficiently though. Nvidia is the Ford F-150 of the data center world, sure. It’s stupidly huge, ridiculously expensive, and generally not needed unless it’s being used at full utilization all the time. That’s like the only time it makes sense.

If you want to run your own models that have a specific purpose, say, for scientific work folding proteins, and you might have several custom extensible layers that do different things, Nvidia hardware and software doesn’t even support this because of the nature of TensorRT. They JUST announced future support for such things, and it will take quite some time and some vendor lock-in for models to appropriately support it…OR

Just use FPGAs to do the same work faster now for most of those things. The GenAI bullshit bandwagon finally has a wheel off, and it’s obvious people don’t care about the OpenAI approach to having one model doing everything. Compute work on this is already transitioning to single purpose workloads, which AMD saw coming and is prepared for. Nvidia is still out there selling these F-150s to idiots who just want to piss away money.

> Your assessment is missing the simple fact that FPGA can do things a GPU cannot faster

Yes, there are corner cases (many of which no longer exist because of software/compiler enhancements but…). But there is always the argument of “Okay. So we run at 40% efficiency but our GPU is 500% faster so…”

> Nvidia is the Ford F-150 of the data center world, sure. It’s stupidly huge, ridiculously expensive, and generally not needed unless it’s being used at full utilization all the time. That’s like the only time it makes sense.

You are thinking of this like a consumer where those thoughts are completely valid (just look at how often I pack my hatchback dangerously full on the way to and from Lowes…). But also… everyone should have that one friend with a pickup truck for when they need to move or take a load of stuff down to the dump or whatever. Owning a truck yourself is stupid but knowing someone who does…

Which gets to the idea of having a fleet of work vehicles versus a personal vehicle. There is a reason so many companies have pickup trucks (maybe not an f150 but something actually practical). Because, yeah, the gas consumption when you are just driving to the office is expensive. But when you don’t have to drive back to headquarters to swap out vehicles when you realize you need to go buy some pipe and get all the fun tools? It pays off pretty fast and the question stops becoming “Are we wasting gas money?” and more “Why do we have a car that we just use for giving quotes on jobs once a month?”

Which gets back to the data center issue. The vast majority DO have a good range of cards either due to outright buying AMD/Intel or just having older generations of cards that are still in use. And, as a consumer, you can save a lot of money by using a cheaper node. But… they are going to still need the big chonky boys which means they are still going to be paying for Jensen’s new jacket. At which point… how many of the older cards do they REALLY need to keep in service?

Which gets back down to “is it actually cost effective?” when you likely need

I’m thinking of this as someone who works in the space, and has for a long time.

An hour of time for a g4dn instance in AWS is 4x the cost of an FPGA that can do the same work faster in MOST cases. These aren’t edge cases, they are MOST cases. Look at a Sagemaker, AML, GMT pricing for the real cost sinks here as well.

The raw power and cooling costs contribute to that pricing cost. At the end of the day, every company will choose to do it faster and cheaper, and nothing about Nvidia hardware fits into either of those categories unless you’re talking about milliseconds of timing, which THEN only fits into a mold of OpenAI’s definition.

None of this bullshit will be a web-based service in a few years, because it’s absolutely unnecessary.

And you are basically a single consumer with a personal car relative to those data centers and cloud computing providers.

YOUR workload works well with an FPGA. Good for you, take advantage of that to the best degree you can.

People/companies who want to run newer models that haven’t been optimized for/don’t support FPGAs? You get back to the case of “Well… I can run a 25% cheaper node for twice as long?”. That isn’t to say that people shouldn’t be running these numbers (most companies WOULD benefit from the cheaper nodes for 24/7 jobs and the like). But your use case is not everyone’s use case.
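To put rough numbers on the “25% cheaper node for twice as long” case, here is a minimal sketch. The hourly rate and job duration are made up for illustration and are not real cloud pricing, and `job_cost` is a hypothetical helper.

```python
def job_cost(hourly_rate: float, hours: float) -> float:
    """Total cost of a job that is billed by the hour."""
    return hourly_rate * hours


# Hypothetical numbers, purely illustrative (not real cloud pricing).
fast_rate = 4.00    # $/hour for the newer, pricier node
fast_hours = 10.0   # how long the job takes on it

cheap_rate = fast_rate * 0.75   # node is 25% cheaper per hour
cheap_hours = fast_hours * 2    # but the job takes twice as long

fast_total = job_cost(fast_rate, fast_hours)
cheap_total = job_cost(cheap_rate, cheap_hours)

print(f"fast node:  ${fast_total:.2f}")
print(f"cheap node: ${cheap_total:.2f}")
print(f"cheap/fast cost ratio: {cheap_total / fast_total:.2f}x")
```

Under these assumptions the “cheaper” node ends up costing 1.5x as much for the same job. The picture changes if the slowdown is smaller than the discount, which is why it is worth running the numbers per workload.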

And it, once again, boils down to: if people are going to require the latest and greatest Nvidia, what incentive is there in spending significant amounts of money getting it to work on a five-year-old AMD? Which is where smaller businesses and researchers looking for a buyout come into play.

At the end of the day, every company will choose to do it faster and cheaper, and nothing about Nvidia hardware fits into either of those categories unless you’re talking about milliseconds of timing, which THEN only fits into a mold of OpenAI’s definition.

Faster is almost always cheaper. There have been decades of research into this and it almost always boils down to it being cheaper to just run at full speed (if you have the ability to) and then turn it off rather than run it longer but at a lower clock speed or with fewer transistors.
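A toy version of that “run at full speed, then turn it off” argument, as a sketch only. The wattages and timings are invented, and they are deliberately chosen so that a large fixed power draw dominates; with different numbers the conclusion can flip. `energy_joules` is a hypothetical helper.

```python
def energy_joules(active_watts: float, idle_watts: float,
                  active_seconds: float, window_seconds: float) -> float:
    """Energy used over a fixed window: run the job, then idle for the rest."""
    idle_seconds = window_seconds - active_seconds
    return active_watts * active_seconds + idle_watts * idle_seconds


WINDOW = 100.0  # seconds we are accounting for (assumed)

# Full speed: higher draw, but done in 40 s and nearly off afterwards.
race = energy_joules(active_watts=300.0, idle_watts=10.0,
                     active_seconds=40.0, window_seconds=WINDOW)

# Slowed down: lower draw, but busy for the entire window.
slow = energy_joules(active_watts=200.0, idle_watts=10.0,
                     active_seconds=100.0, window_seconds=WINDOW)

print(f"full speed then idle: {race:.0f} J")
print(f"slow the whole time:  {slow:.0f} J")
```

The slow run only wins if slowing down cuts power draw by more than it stretches the runtime, which is the part the made-up numbers above assume does not happen.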

And nVidia wouldn’t even let the word “cheaper” see the glory that is Jensen’s latest jacket that costs more than my car does. But if you are somehow claiming that “faster” doesn’t apply to that company then… you know nothing (… Jon Snow).

unless you’re talking about milliseconds of timing

So… it’s not faster unless you are talking about time?

Also, milliseconds really DO matter when you are trying to make something responsive and already dealing with round trip times with a client. And they add up quite a bit when you are trying to lower your overall footprint so that you only need 4 nodes instead of 5.
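As a rough illustration of how a few milliseconds turn into a whole node, here is a sketch using Little’s law (requests in flight = arrival rate × time per request). The request rate, latencies, and per-node capacity are all assumed values, and `nodes_needed` is a hypothetical helper.

```python
import math


def nodes_needed(requests_per_second: int, latency_ms: int,
                 slots_per_node: int) -> int:
    """Nodes required to hold every in-flight request at once."""
    # Little's law: average in-flight requests = arrival rate * service time.
    in_flight = requests_per_second * latency_ms / 1000
    return math.ceil(in_flight / slots_per_node)


# Assumed workload: 1000 requests/s, each node handles 10 requests at once.
before = nodes_needed(requests_per_second=1000, latency_ms=50, slots_per_node=10)
after = nodes_needed(requests_per_second=1000, latency_ms=40, slots_per_node=10)

print(f"50 ms per request: {before} nodes")
print(f"40 ms per request: {after} nodes")
```

With these numbers, shaving 10 ms off each request is the difference between 5 nodes and 4.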

They don’t ALWAYS add up, depending on your use case. But for the data centers that are selling computers by time? Yeah, time matters.

So I will just repeat this: Your use case is not everyone’s use case.

I mean… I can shut this down pretty simply. Nvidia makes GPUs that are currently used as a blunt force tool, which is dumb, and now that the grift has been blown, OpenAI, Anthropic, Meta, and all the others trying to build a business around really simple tooling that is open source are about to be under so much scrutiny for the cost that everyone will figure out that there are cheaper ways to do this.

Plus AMD, Con Nvidia. It’s really simple.

Ah. Apologies for trying to have a technical conversation with you.

I’m way behind on the hardware at this point.

Are you saying that AMD is moving toward an FPGA chip on GPU products?

While I see the appeal - that’s going to dramatically increase cost to the end user.

No.

GPU is good for graphics. That’s what it is designed and built for. It just so happens to be good at dealing with programmatic neural network tasks because of parallelism.

FPGA is fully programmable to do whatever you want, and can be reprogrammed on the fly. Pretty perfect for reducing costs if you have a platform that does things like audio processing, then video processing, or deep learning, especially in cloud environments. Instead of spinning up a bunch of expensive single-purpose instances, you can just spin up one FPGA type, and reprogram on the fly to best perform on the work at hand when the code starts up. Simple.

AMD bought Xilinx in 2019 when they were still a fledgling company because they realized the benefit of this. They are now selling mass amounts of these chips to data centers everywhere. It’s also what the XDNA coprocessors on all the newer Ryzen chips are built on, so home users have access to an FPGA chip right there. It’s efficient, cheaper to make than a GPU, and can perform better on lots of non-graphic tasks than GPUs without all the massive power and cooling needs. Nvidia has nothing on the roadmap to even compete, and they’re about to find out what a stupid mistake that is.

Is XDNA actually an FPGA? My understanding was that it’s an ASIC implementation of the Xilinx NPU IP. You can’t arbitrarily modify it.

Yep

Huh. Everything I’m reading seems to imply it’s more like a DSP ASIC than an FPGA (even down to the fact that it’s a VLIW processor) but maybe that’s wrong.

I’m curious what kind of work you do that’s led you to this conclusion about FPGAs. I’m guessing you specifically use FPGAs for this task in your work? I’d love to hear about what kinds of ops you specifically find speedups in. I can imagine many exist, as otherwise there wouldn’t be a need for features like tensor cores and transformer acceleration on the latest NVIDIA GPUs (since obviously these features must exploit some inefficiency in GPGPU architectures, up to limits in memory bandwidth of course), but also I wonder how much benefit you can get since in practice a lot of features end up limited by memory bandwidth, and unless you have a gigantic FPGA I imagine this is going to be an issue there as well.

I haven’t seriously touched FPGAs in a while, but I work in ML research (namely CV) and I don’t know anyone on the research side bothering with FPGAs. Even dedicated accelerators are still mostly niche products because in practice, the software suite needed to run them takes a lot more time to configure. For us on the academic side, you’re usually looking at experiments that take a day or a few to run at most. If you’re now spending an extra day or two writing RTL instead of just slapping together a few lines of python that implicitly calls CUDA kernels, you’re not really benefiting from the potential speedup of FPGAs. On the other hand, I know accelerators are handy for production environments (and in general they’re more popular for inference than training).

I suspect it’s much easier to find someone who can write quality CUDA or PTX than someone who can write quality RTL, especially with CS being much more popular than ECE nowadays. At a minimum, the whole FPGA skillset seems much less common among my peers. Maybe it’ll be more crucial in the future (which will definitely be interesting!) but it’s not something I’ve seen yet.

Looking forward to hearing your perspective!

I remember Xilinx from way back in the 90s when I was taking my EE degree, so they were hardly a fledgling in 2019.

Not disputing your overall point, just that detail because it stood out for me since Xilinx is a name I remember well, mostly because it’s unusual.

They were kind of pioneering the space, but about to collapse. AMD did good by scooping them up.

FPGAs have been a thing for ages.

If I remember it correctly (I learned this stuff 3 decades ago) they were basically an improvement on logic circuits without clocks (think stuff like NAND and XOR gates - digital signals just go in and the result comes out on the other side with no delay beyond that caused by analog elements such as parasitic inductances and capacitances, so without waiting for a clock transition).

The thing is, back then clocking of digital circuits really took off (because it’s WAY simpler to have things done one stage at a time with a clock synchronizing when results are read from one stage and sent to the next stage, since different gates have different delays and so making sure results are only read after the slowest path is done is complicated) so all CPU and GPU architectures nowadays are based on having a clock, with clock transitions dictating things like when each step of processing a CPU/GPU instruction is started.

Circuits without clocks have the capability of being way faster than circuits with clocks if you can manage the problem of different digital elements having different delays in producing results. I think what we’re seeing here is a revival of using circuits without clocks (or at least with blocks of logic done between clock transitions which are much longer and more complex than the processing of a single GPU instruction).
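The latency trade-off described above can be put in numbers with a toy model. This is only a sketch: the stage delays are invented, and it ignores setup/hold margins, clock skew, pipelined throughput, and the handshaking overhead that real clockless designs pay. Both function names are hypothetical.

```python
def clocked_latency(stage_delays_ns: list[int]) -> int:
    """Every stage waits a full clock period, set by the slowest stage."""
    clock_period = max(stage_delays_ns)
    return clock_period * len(stage_delays_ns)


def unclocked_latency(stage_delays_ns: list[int]) -> int:
    """Idealized clockless chain: each stage takes only its own delay."""
    return sum(stage_delays_ns)


# Invented per-stage propagation delays, in nanoseconds.
delays = [3, 7, 4, 5]

print(f"clocked:   {clocked_latency(delays)} ns")
print(f"unclocked: {unclocked_latency(delays)} ns")
```

The gap between the two figures comes entirely from the faster stages sitting idle until the next clock edge, which is the cost of the simpler, synchronized design.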

Yes, but I’m not sure what your argument is here.

Least resistance to an outcome (in this case whatever you program it to do) is faster.

Applicable to waterfall flows, FPGA makes absolute sense for the neural networks as they operate now.

I’m confused on your argument against this and why GPU is better. The benchmarks are out in the world, go look them up.

I’m not making an argument against it, just clarifying where it sits as a technology.

As I see it, it’s like electric cars - a technology that was overtaken by something else in the early days when that domain was starting even though it was the first to come out (the first cars were electric and the ICE engine was invented later) and which now has a chance to be successful again because many other things have changed in the meantime and we’re a lot closer to the limits of the tech that did get widely adopted back in the early days.

It actually makes a lot of sense to improve the speed of what programming can do by making it capable of also working outside the step-by-step instruction-execution straitjacket that is the CPU/GPU clock.

I think the idea is that you can optimise it for the model or maybe? (Guessing mostly)

Great, a stock sale.

This has nothing to do with DeepSeek. The world has run out of flashy leather jackets for Jensen to wear, so nvidia is toast.

Okay, cool…

So, how much longer before Nvidia stops slapping a “$500-600 RTX XX70” label on a $300 RTX XX60 product with each new generation?

The thinly-veiled 75-100% price increases aren’t fun for those of us not constantly-touching-themselves over AI.

What the fuck are markets when you can automate making money on them???

I’ve been WTF about the stock market for a long time but now it’s obviously a scam.

The stock market is nothing more than a barometer for the relative peace of mind of rich people.

Economics is a social science not a hard science, it’s highly reactive to rumors and speculation. The stock market kind of does just run on vibes.

It’s fun seeing these companies take a hit and the bubble deflate, but long term won’t this just make AI a more alluring form of enshittification to a wider audience?

Yeah I’d say so - but you can’t put the genie in the bottle.

It’s just fighting for who gets the privilege to do so

ROFL 🤣😂🤣😂🤣😂🤣 eat shit, Huang

Good. Nvidia has grown greedy and fat.

Good. That shit is way overvalued.

There is no way that Nvidia are worth 3 times as much as TSMC, the company that makes all their shit and more besides.

I’m sure some of my market tracker funds will lose value, and they should, because they should never have been worth this much to start with.

It’s because Nvidia is an American company and also because they make final stage products. American companies right now are all overinflated and almost none of the stocks are worth what they’re at because of foreign trading influence.

As much as people whine about inflation here, the US didn’t get hit as bad as many other countries and we recovered quickly which means that there is a lot of incentive for other countries to invest here. They pick our top movers, they invest in those. What you’re seeing is people bandwagoning onto certain stocks because the consistent gains create more consistent gains for them.

The other part is that yes, companies who make products at the end stage tend to be worth a lot more than people trading more fundamental resources or parts. This is true of almost every industry except oil.

The US is also a regulations haven compared to other developed economies, corporations get away with shit in most places but America is on a whole other level of regulatory capture.

It is also because the US dollar is the reserve currency of the world and the USA has open capital markets.

Savers of the world (including countries like Germany and China who have excess savings due to constrained consumer demand) dump their savings into US assets such as stocks.

This leads to asset bubbles and an uncompetitively high US dollar.

The current administration is working real hard on removing trust and value of anything American.

Yuuuuup…might just put their market into…🤔 Freefall?

The root problem they are trying to fix is real (systemic trade imbalances) but the way they are trying to fix it is terrible and won’t work.

- Only a universally applied tariff would work in theory but would require other countries not to retaliate (there will 100% be retaliation).
- It doesn’t really solve the root cause, capital inflows into the USA rather than purchasing US goods and services.
- Trump wants to maintain being the reserve currency which is a big part of the problem (the strength of currency may not align with domestic conditions, i.e. high when it needs to be low).

I consider myself to be a Saver of the World.

Our Lord and Saver

That probably also means that when the trend reverses it will turn into a rout.

Yeah, a lot of companies are way overvalued. Look at Carvana: how is this company worth 50 billion?

But TSMC is “encumbered” by all of these plant and equipment mate 🤡