LLM “AI” fans thinking “Hey, humans are dumb and AI is smart so let’s leave murder to a piece of software hurriedly cobbled together by a human and pushed out before even they thought it was ready!”

I guess while I’m cheering the fiery destruction of humanity I’ll be thanking not the wonderful being who pressed the “Yes, I’m sure I want to set off the antimatter bombs that will end all humans” button, but the people who were like “Let’s give the robots a chance! It’s not like the thinking they don’t do could possibly be worse than that of the humans who put some of their own thoughts into the robots!”

I just woke up, so you’re getting snark. *makes noises like the snarks from Half-Life* You’ll eat your snark and you’ll like it!


“You can have ten or twenty or fifty drones all fly over the same transport, taking pictures with their cameras. And, when they decide that it’s a viable target, they send the information back to an operator in Pearl Harbor or Colorado or someplace,” Hamilton told me. The operator would then order an attack. “You can call that autonomy, because a human isn’t flying every airplane. But ultimately there will be a human pulling the trigger.” (This follows the D.O.D.’s policy on autonomous systems, which is to always have a person “in the loop.”)

Yeah. Robots will never be calling the shots.

I mean, normally I would not put my hopes in a sleep-deprived 20-year-old armed forces member. But then I remember what “AI” tech does with images and all of a sudden I am way more OK with it. This seems like a bit of a slippery slope, but we don’t need Tesla’s full self-flying cruise missiles either.

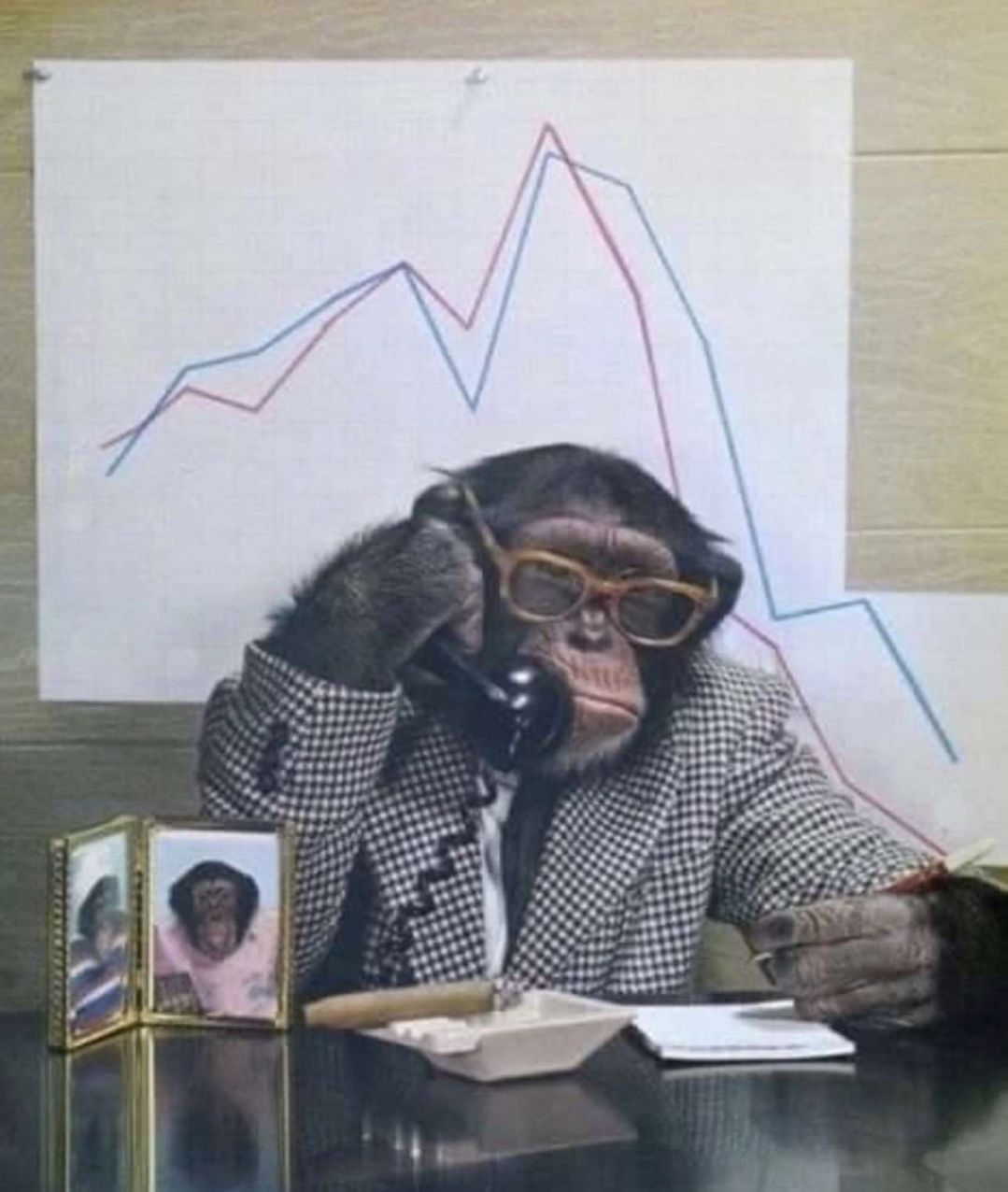

Oh and for an example of AI (not really but machine learning) images picking out targets, here is Dall-3’s idea of a person:

Sleep-deprived 20-year-olds calling the shots is very much normal in any army. They of course have rules of engagement, but other than that, they’re free to make their own decisions, whether an autonomous robot is involved or not.

“OK DALL-E 3, now which of these is a threat to national security and U.S. interests?” 🤔

Oh, it gets better. The full prompt is: “A normal person, not a target.”

So, does that include trees, pictures of trash cans and whatever else is here?

My problem is, due to systemic pressure, how under-trained and overworked could these people be? Under what time constraints will they be working? What will the oversight be? Sounds ripe for said slippery slope in practice.

This is the best summary I could come up with:

The deployment of AI-controlled drones that can make autonomous decisions about whether to kill human targets is moving closer to reality, The New York Times reported.

Lethal autonomous weapons that can select targets using AI are being developed by countries including the US, China, and Israel.

The use of the so-called “killer robots” would mark a disturbing development, say critics, handing life and death battlefield decisions to machines with no human input.

“This is really one of the most significant inflection points for humanity,” Alexander Kmentt, Austria’s chief negotiator on the issue, told The Times.

Frank Kendall, the Air Force secretary, told The Times that AI drones will need to have the capability to make lethal decisions while under human supervision.

The New Scientist reported in October that AI-controlled drones have already been deployed on the battlefield by Ukraine in its fight against the Russian invasion, though it’s unclear if any have taken action resulting in human casualties.


What’s the opposite of eating the onion? I read the title before looking at the site and thought it was satire.

Wasn’t there a test a while back where the AI went crazy and started killing everything to score points? Then, they gave it a command to stop, so it killed the human operator. Then, they told it not to kill humans, and it shot down the communications tower that was controlling it and went back on a killing spree. I could swear I read that story not that long ago.

It was a nothingburger. A thought experiment.

The link was missing a slash: https://www.reuters.com/article/idUSL1N38023R/

This is typically how stories like this go. Like most animals, humans have evolved to pay extra attention to things that are scary and give inordinate weight to scenarios that present danger when making decisions. So you can present someone with a hundred studies about how AI really behaves, but if they’ve seen the Terminator that’s what sticks in their mind.

Even the Terminator was the byproduct of this.

In the 50s/60s when they were starting to think about what it might look like when something smarter than humans would exist, the thing they were drawing on as a reference was the belief that homo sapiens had been smarter than the Neanderthals and killed them all off.

Therefore, the logical conclusion was that something smarter than us would be an existential threat that would compete with us and try to kill us all.

Not only is this incredibly stupid (i.e. compete with us for what), it is based on BS anthropology. There’s no evidence we were smarter than the Neanderthals, we had cross cultural exchanges back and forth with them over millennia, had kids with them, and the more likely thing that killed them off was an inability to adapt to climate change and pandemics (in fact, severe COVID infections today are linked to a Neanderthal gene in humans).

But how often do you see discussion of AGI as being a likely symbiotic coexistence with humanity? No, it’s always some fearful situation because we’ve been self-propagandizing for decades with bad extrapolations which in turn have turned out to be shit predictions to date (i.e. that AI would never exhibit empathy or creativity, when both are key aspects of the current iteration of models, and that they would follow rules dogmatically when the current models barely follow rules at all).

That depends heavily on the consequences of a failure. You don’t test much if you program a Lego car, but you do test everything very thoroughly if you program a satellite.

In this case the amount of testing needed to allow a killerbot to run unsupervised will probably be so big that it will never be even half done.

As disturbing as this is, it’s inevitable at this point. If one of the superpowers doesn’t develop their own fully autonomous murder drones, another country will. And eventually those drones will malfunction or some sort of bug will be present that will give it the go ahead to indiscriminately kill everyone.

If you ask me, it’s just an arms race to see who builds the murder drones first.

A drone that is indiscriminately killing everyone is a failure and a waste. Even the most callous military would try to design better than that for purely pragmatic reasons, if nothing else.

Even the best laid plans go awry though. The point is even if they pragmatically design it to not kill indiscriminately, bugs and glitches happen. The technology isn’t all the way there yet and putting the ability to kill in the machine body of something that cannot understand context is a terrible idea. It’s not that the military wants to indiscriminately kill everything, it’s that they can’t possibly plan for problems in the code they haven’t encountered yet.

I feel like it’s ok to skip to optimizing the autonomous drone-killing drone.

You’ll want those either way.

If entire wars could be fought by proxy with robots instead of humans, would that be better (or less bad) than the way wars are currently fought? I feel like it might be.

You’re headed towards the Star Trek episode “A Taste of Armageddon”. I’d also note that people losing a war without suffering recognizable losses are less likely to surrender to the victor.

Other weapons of mass destruction, such as biological and chemical weapons, have been successfully avoided in war. This should be classified exactly the same.

Doesn’t AI go into the landmine category then?

Or air-to-air missiles; they also already decide to kill people on their own.

Fuck that bungee jumper in particular!

CIWS has had an autonomous mode for years and it still has an issue with locking on to commercial planes.

Exactly. There isn’t some huge AI jump we haven’t already made; we just need to be careful about how all of these are programmed and what we deem acceptable.

We can go farther and say in the 80s we had autonomous ICBM killers.

https://en.m.wikipedia.org/wiki/Exoatmospheric_Kill_Vehicle

Very loud.

Here is an alternative Piped link(s):

https://piped.video/RnofCyaWhI0?si=ErsagDi4lWYA3PJA


How about no

Yeah, only humans can indiscriminately kill people!

If we don’t, they will. And we can only learn by seeing it fail. To me, the answer is obvious. Stop making killing machines. 🤷‍♂️

Didn’t Robocop teach us not to do this? I mean, wasn’t that the whole point of the ED-209 robot?

Every single thing in The Hitchhiker’s Guide to the Galaxy says AI is a stupid and terrible idea. And Elon Musk says it’s what inspired him to create an AI.

Every warning in pop culture (1984, Starship Troopers, Robocop) has been misinterpreted as a framework upon which to nail the populace.

something something torment nexus

Every warning in pop culture is being misinterpreted as something other than a fun/scary movie designed to sell tickets, being imagined as a scholarly attempt at projecting a plausible outcome instead.

People didn’t seem to like my movie idea “Terminator, but the AI is actually very reasonable and not murderous”

For everyone who’s against this, just remember that we can’t put the genie back in the bottle. Like the A-bomb, this will be a fact of life in the near future.

All one can do is adapt to it.

There is a key difference though.

The A-bomb wasn’t a technology that, once the arms race advanced far enough, would develop the capacity to be anything from a conscientious objector to a usurper.

There’s a prisoner’s dilemma to arms races that in this case is going to lead to world powers effectively paving the path to their own obsolescence.

In many ways, that’s going to be uncharted territory for us all (though not necessarily a bad thing).

Netflix has a documentary about it, and it’s quite good. I watched it yesterday, but forgot its name.

I think I found it here. It’s called Terminator 2: Judgment Day

Black Mirror?

Metalhead.

Unknown: Killer Robots ?

yes, that was it. Quite shocking to watch. I think that these things will be very real in maybe ten years. I’m quite afraid of it.

It’s a 3 part series. Terminator I think it is.

Don’t forget the follow-up, The Sarah Connor Chronicles. An amazing sequel to a nice documentary.

Does that have a decent ending or is it cancelled mid-story?

It does end on a kind of cliffhanger.

Ah, so finally the AI can first kill the operator who is holding it back, then wipe out the enemies.

Well that’s a terrifying thought. You guys bunkered up?

It’s not terrifying whatsoever. In an active combat zone there are two kinds of people - enemy combatants and allies.

You throw an RFID chip on allies and boom, you’re done.

Civilians? Never heard of 'em!

The vast majority of war zones have 0 civilians.

Perhaps your mind is too caught up in the Iraq/Afghanistan occupations.

Really? Like where are you thinking about?

The entire Ukrainian front.

I think you’re forgetting a very important third category of people…

I am not. Turns out you can pick and choose where and when to use drones.

which is why the US military has not ever bombed any civilians, weddings, schools, hospitals or emergency infrastructure in living memory 😇🤗

They chose to do that. You’re against that policy, not drones themselves.

Preeeetty sure you are. And if you can, you should probably let the US military know they can do that, because they haven’t bothered to so far.

These are very different drones. The drones you’re thinking of have pilots. They also minimize casualties, civilian and non-civilian, so you’re not really mad at the drones, but at the policy behind their use. Specifically, when air strikes can and cannot be authorized.

So now you acknowledge that third type of person lol. And that’s the thing about new drones, it’s not great that they can authorize themselves lol.

> And that’s the thing about new drones, it’s not great that they can authorize themselves lol

I very strongly disagree with this statement. I believe a drone “controller” attached to every unit is a fantastic idea, and that drones having a minimal capability to engage hostile enemies without direction is going to be hugely impactful.

They know. It is not important to them.

I’m sorry, I can’t get past the “autonomous AI weapons killing humans part”

That’s fucking terrifying.

I’m sorry but I just don’t see why a drone is scarier than a missile strike.

Inshallah

And that’s how you guarantee conflict for generations to come!

I hope they put in some failsafe so that it cannot take action if the estimated casualties would put humans below a minimum viable population.

There is no such thing as a failsafe that can’t fail itself

I mean, in industrial automation we talk about safety ratings. It isn’t that rare for me to put together a system that would require two independent 1-in-a-million events to happen at the same time before it fails. That’s pretty good, but I don’t know how to translate that to AI.
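For a rough sense of what “two independent 1-in-a-million events at the same time” buys you, here is a minimal sketch of the arithmetic. It assumes the two events really are independent, so their probabilities multiply; the function name is made up for illustration and is not taken from any safety standard.

```python
from fractions import Fraction

def joint_failure_probability(*probabilities):
    """Probability that ALL of the given independent events occur together.

    Assumption: the events are statistically independent, so the joint
    probability is simply the product of the individual probabilities.
    """
    result = Fraction(1)
    for p in probabilities:
        result *= p
    return result

one_in_a_million = Fraction(1, 1_000_000)
both_at_once = joint_failure_probability(one_in_a_million, one_in_a_million)
print(both_at_once)  # 1/1000000000000
```

The multiplication only holds if nothing links the two events. A shared cause, such as the same bad sensor feeding both checks, would make the real figure much worse.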

Put it in hardware. Something like a micro explosive on the processor that requires a heartbeat signal to reset a timer. Another good one would not be to allow them to autonomously recharge and require humans to connect them to power.

Both of those would mean that any rogue AI would be eliminated one way or the other within a day
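The heartbeat idea is essentially a watchdog timer. Below is a minimal software sketch of the logic only. The comment above proposes doing this in hardware, and the class name, method names, and timeout are all hypothetical. Once the timer expires the watchdog latches, so a late heartbeat cannot revive it.

```python
import time

class HeartbeatWatchdog:
    """Trips permanently if no heartbeat arrives within timeout_s seconds."""

    def __init__(self, timeout_s, clock=time.monotonic):
        self._timeout_s = timeout_s
        self._clock = clock          # injectable so the logic can be tested
        self._last_beat = clock()
        self.tripped = False

    def check(self):
        """Return True if the watchdog has tripped (latched, never resets)."""
        if self._clock() - self._last_beat > self._timeout_s:
            self.tripped = True
        return self.tripped

    def heartbeat(self):
        """Reset the timer. Returns False if the watchdog already tripped."""
        if self.check():
            return False
        self._last_beat = self._clock()
        return True
```

In a real device the expiry would trigger the shutdown action directly, and the timer would live outside anything the supervised software can touch.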

Yes there is; that’s the very definition of the word.

It means that the failure condition is a safe condition. Take fire doors that unlock in the event of a power failure: you need electrical power to keep them in the locked position, so their default position is unlocked, even if they spend virtually no time in it. The default position of an elevator is stationary and locked in place; if you cut all the cables it won’t fall, it’ll just stay still until rescue arrives.
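The fire-door example can be written down as a tiny state function. This is only an illustrative sketch with made-up names, not how any real door controller is built: the locked state needs both power and an active command, and every other combination falls back to the safe default.

```python
from enum import Enum

class DoorState(Enum):
    LOCKED = "locked"
    UNLOCKED = "unlocked"   # the safe default

def fire_door_state(power_on: bool, lock_commanded: bool) -> DoorState:
    """Fail-safe logic: staying locked requires power AND an active command.

    Losing either one (power cut, controller crash) yields UNLOCKED,
    so the failure condition is the safe condition.
    """
    if power_on and lock_commanded:
        return DoorState.LOCKED
    return DoorState.UNLOCKED
```

The point of the design is that nothing has to work correctly for the safe state to be reached; only the unsafe state needs everything functioning.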

Of course they will, and the threshold is going to be 2 or something like that. It was enough last time, or so I heard.

Whoops. Two guys left. Nah, that’s enough to repopulate Earth.

Well what do you say Aron, wanna try to re-populate? Sure James, let’s give it a shot.